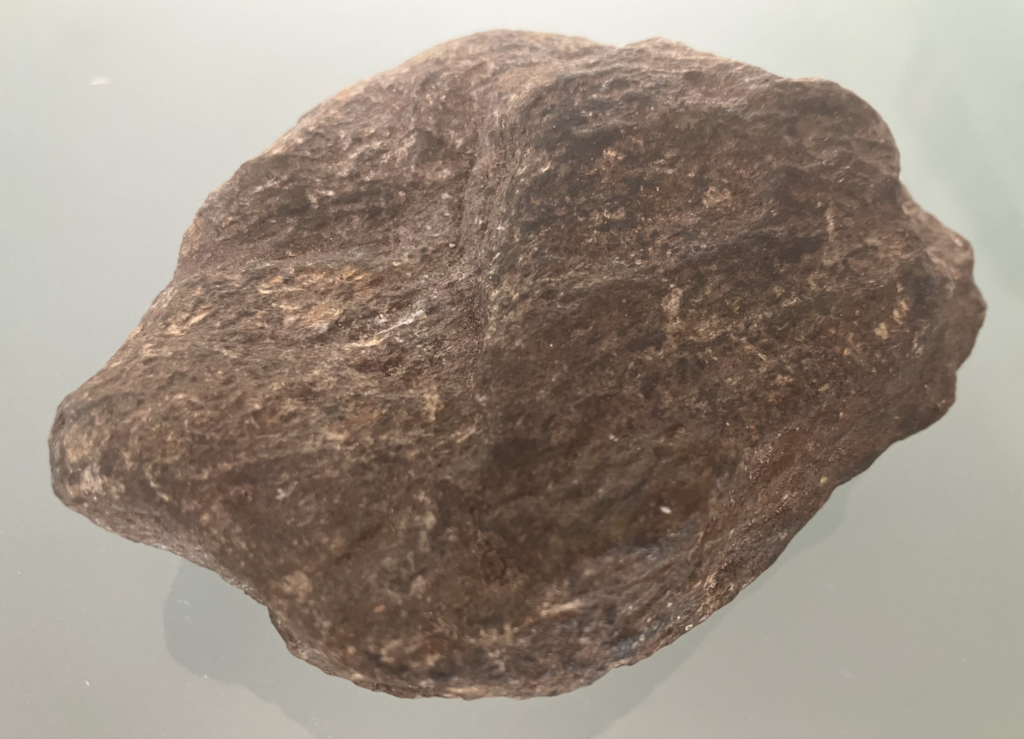

Fifty-one point six of the two thousand two hundred miles of the Appalachian Trail wind through the northwest corner of Connecticut. Near Salisbury, south of the Massachusetts border, the route traces the spine of the Taconic mountains. The range is rounded and worn down, but still holds its own. I picked up a rock. Now it’s sitting on my desk.

It’s a piece of schist, metamorphosed sediment that partially crystallized roughly 450 million years ago in a zone that was subject to intense folding from the pressures brought on by an island arc accreting onto the continental margin of what later became North America. Holding the rock imparts the low-grade thrill of connection to the Ordovician, a word which, like Silurian, conjures crinoids and trilobites; furtive, slimy, and scaly things darting through the shafts of sunlight probing the floors of shallow equatorial seas.

Uplift of the Taconic range and associated mountain-building led to rapid erosion, and the outwash sediments were deposited in marine environments that covered what’s now the Midwest, including Illinois.

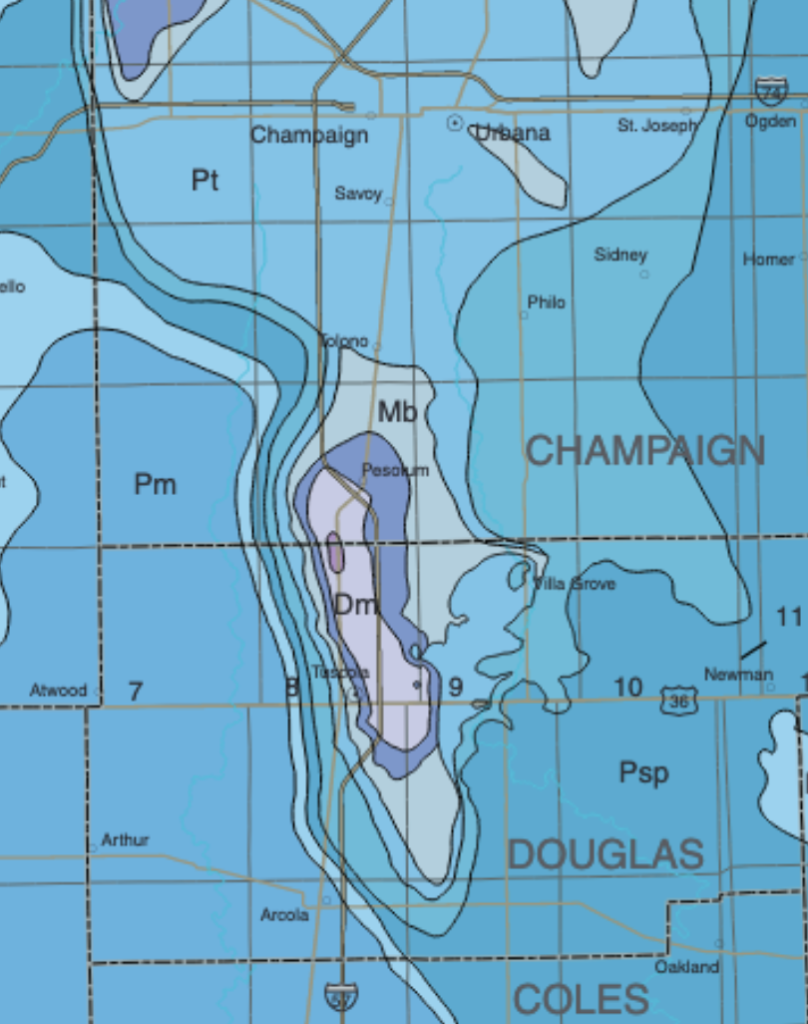

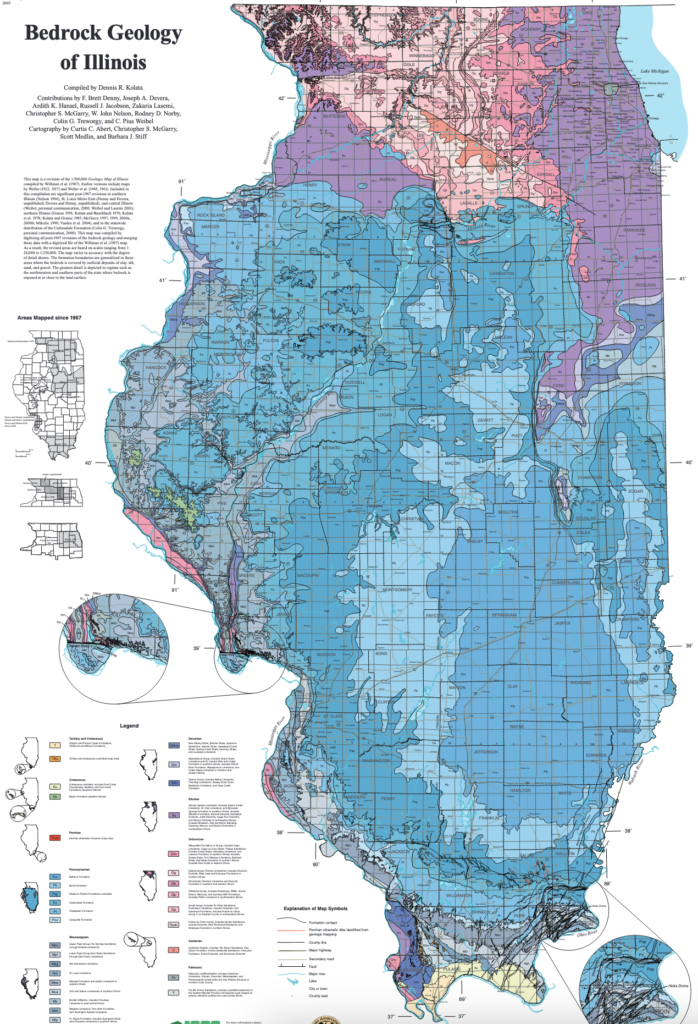

The geologic map of Illinois is known to be a sure way to drive most casual site visitors away:

The youngest bedrock strata in Illinois are graded in shades of blue. The map indicates that a shellacking of sedimentary layers fills a wide structural basin, a bathtub-like depression several hundred miles across in the billion-year-old granite basement. The sag is deepest in the southeast region of the state, where the uppermost layer of Paleozoic sediment is the youngest, of order 300 million years old. The bedrock layers then grow successively older as one moves in any direction away from the center of the basin.

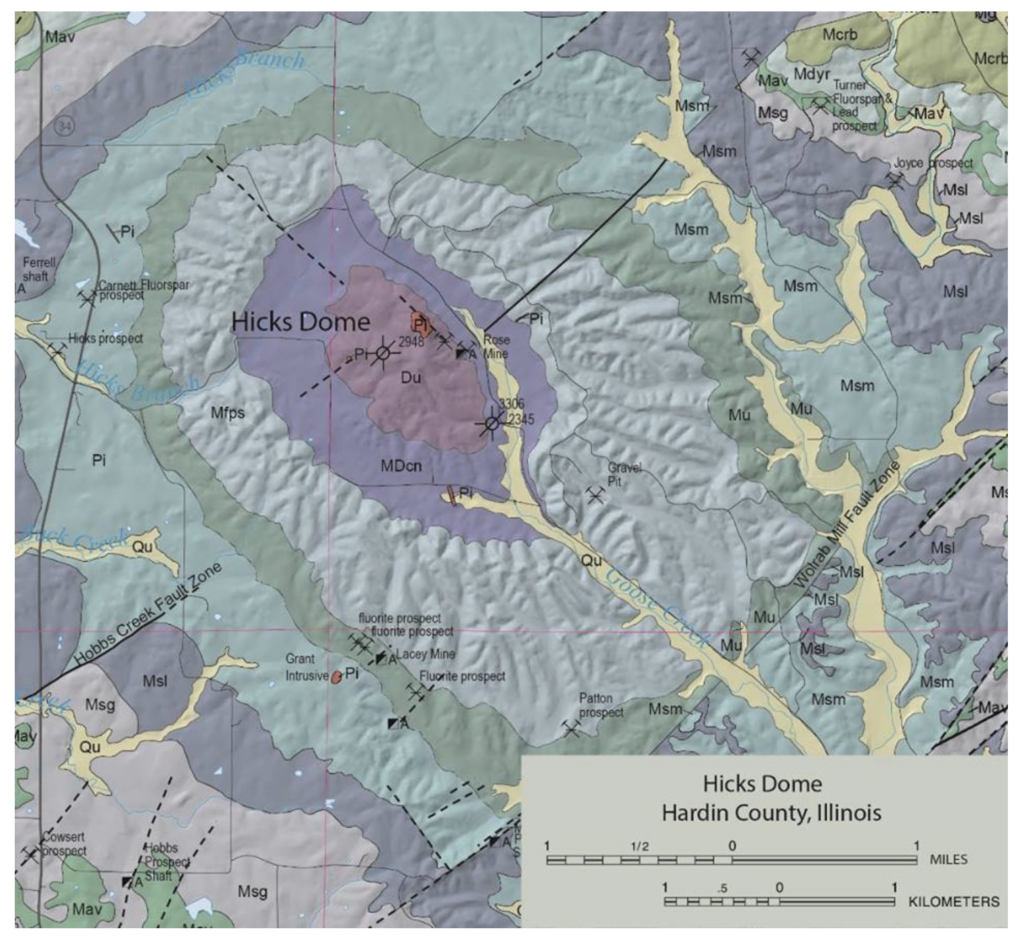

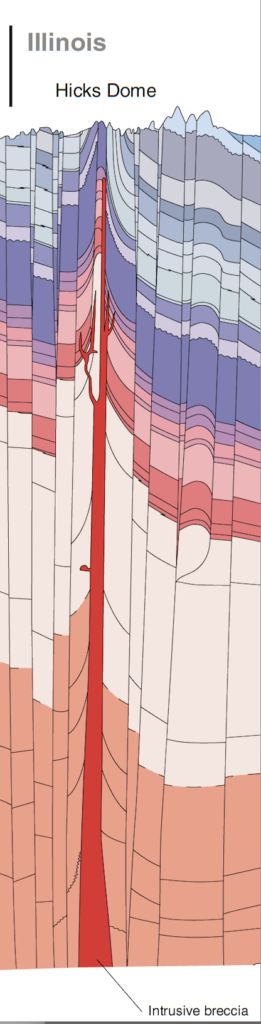

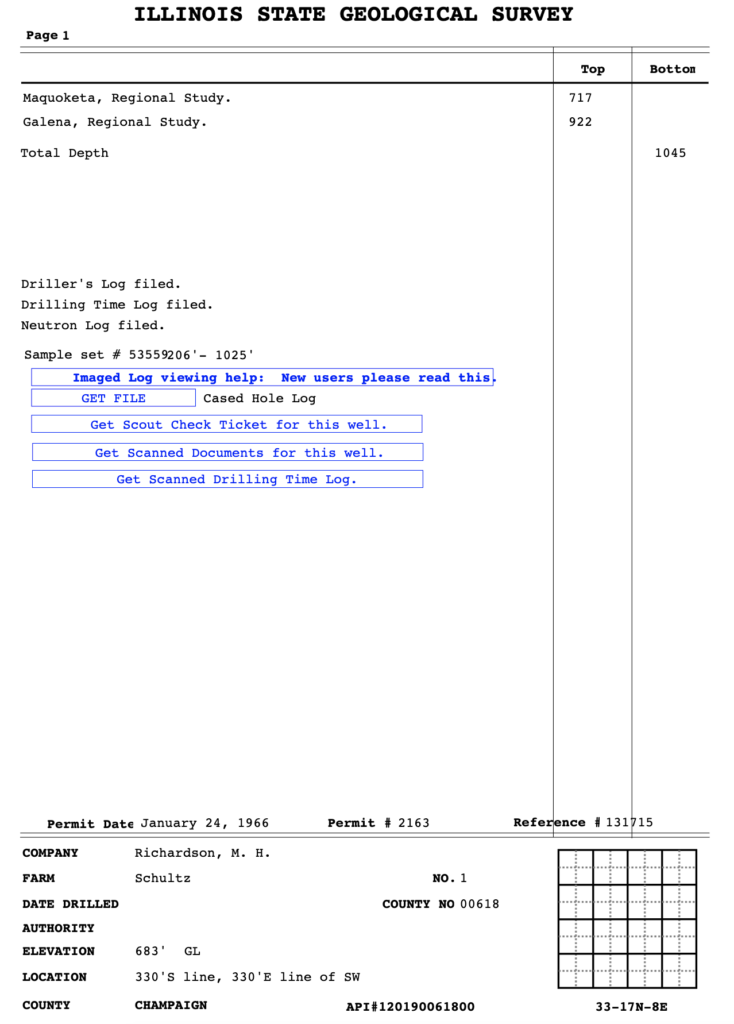

Illinois has produced a lot of oil, which means that a lot of drill cores were logged over the years, and thus the stratigraphy of the basin has been extensively probed. Occasionally, weird anomalies are found spiking up into the dull layers of sediment — Omaha Dome, Hicks Dome, the Champaign Uplift.

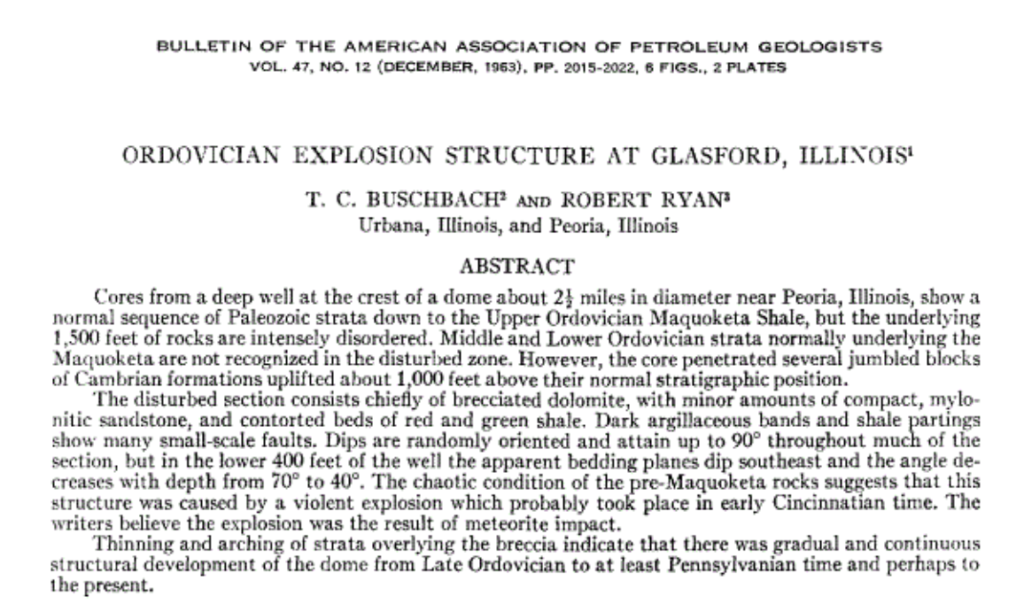

In 1963, an oddity near Peoria was reported in the Bulletin of the American Association of Petroleum Geologists:

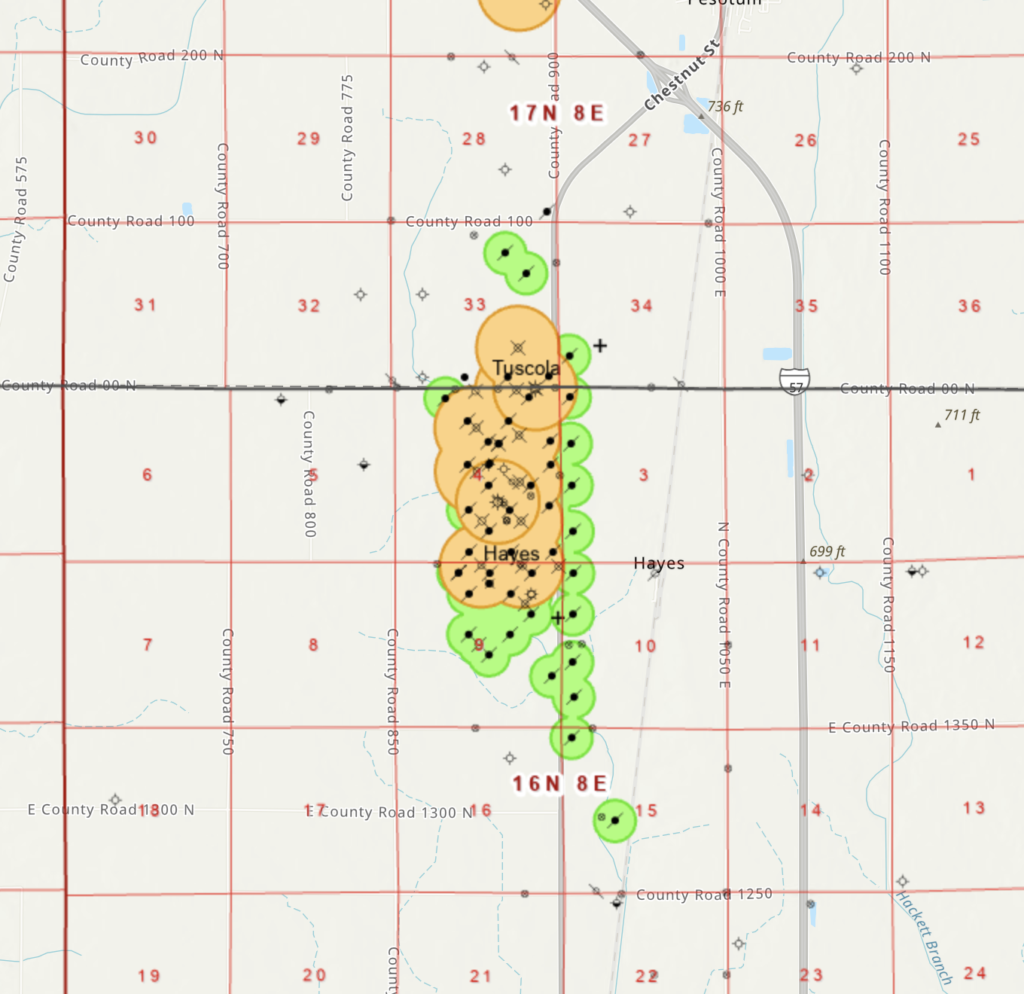

A map from a more recent article shows the location of the above-described Glasford Structure within the Illinois Basin:

As is the case with the other Illinois Basin anomalies, Google Street View within the Glasford Structure bears no sign whatsoever of the chaos beneath the cornfields.

Recent work has confirmed that the Glasford structure is indeed a buried meteorite crater, with a diameter of about 4 km. Some iteration with Jay Melosh’s crater diameter estimator indicates that the responsible object was likely of order 200 meters in diameter. It would have been an unexceptional asteroid, roughly the size of Itokawa, before delivering its 200-megaton Sunday punch.
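As a rough cross-check on that energy figure, here is a minimal sketch of the kinetic-energy arithmetic. The bulk density (3000 kg/m³, a generic stony value) and the impact speed (12 km/s) are my assumptions for illustration, not numbers taken from the crater estimator, and the function name is hypothetical.

```python
import math

MEGATON_TNT_J = 4.184e15  # energy of one megaton of TNT, in joules


def impact_energy_megatons(diameter_m, density_kg_m3, speed_m_s):
    """Kinetic energy of a spherical impactor, in megatons of TNT."""
    radius = diameter_m / 2.0
    mass = (4.0 / 3.0) * math.pi * radius**3 * density_kg_m3
    return 0.5 * mass * speed_m_s**2 / MEGATON_TNT_J


# ASSUMED inputs: 200 m sphere, stony density, 12 km/s impact speed.
energy_mt = impact_energy_megatons(200.0, 3000.0, 12.0e3)
print(f"{energy_mt:.0f} Mt")
```

With these assumed inputs the result lands in the couple-of-hundred-megaton range. Since the energy scales as the square of the speed, a faster encounter pushes it up quickly.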

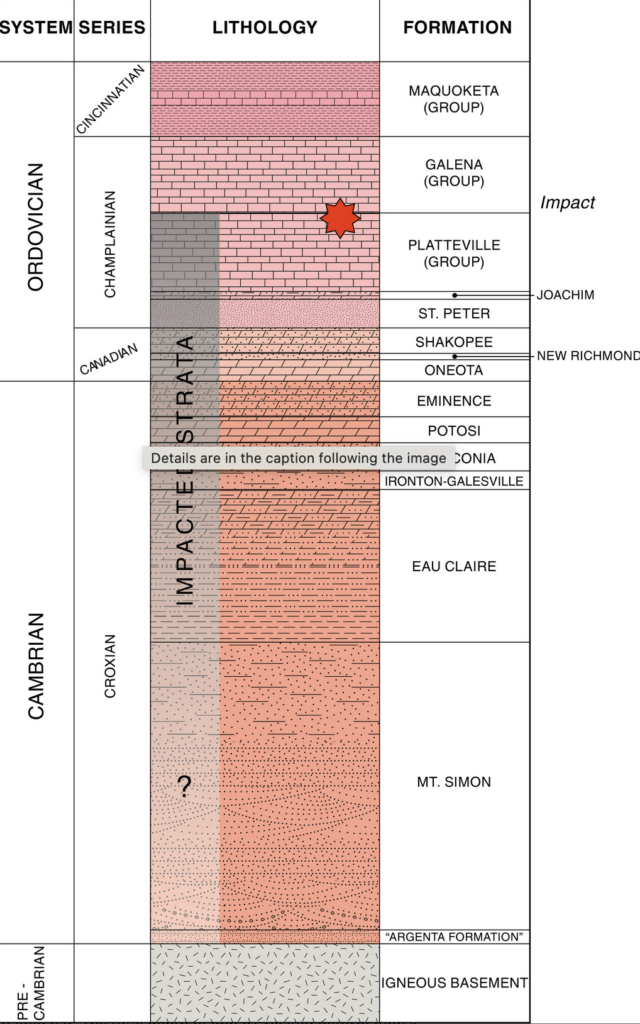

Within the Glasford structure, the region of shattered morphology lies under the Galena group of sediments. This permits the age of the buried crater to be pinned down at 450 Myr (with an uncertainty of a few million years).

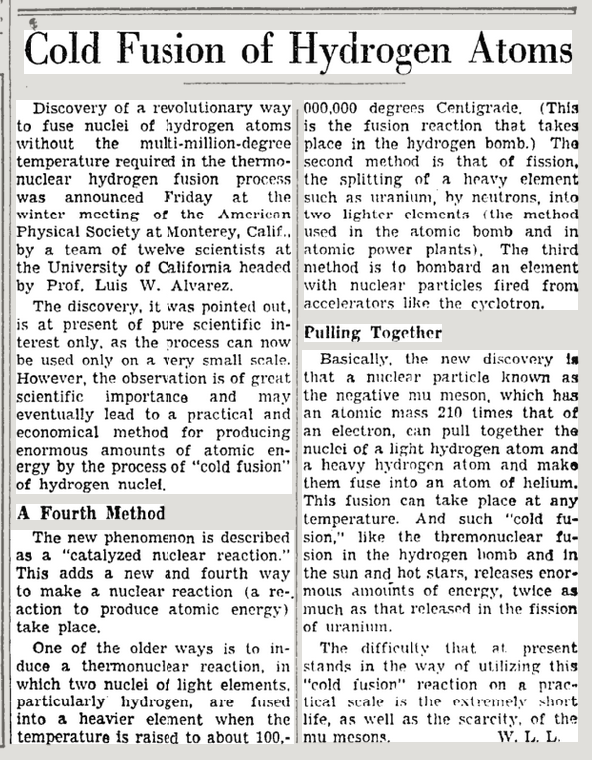

Interestingly, the now-buried Glasford crater is one member of a larger collection of twenty-one known impact structures that have been dated to a roughly 40-million-year span that occurred about 450 million years ago. That count is much higher than what one would expect from the baseline cratering rate, and it thus serves as strong evidence that Earth was taking an outsize beating during the mid-Ordovician. The leading hypothesis is that the impact shower stemmed from a major collision in the asteroid belt about 470 Myr ago that destroyed the L-chondrite parent body (a large asteroid). Over an extended period following that disaster, some of the collisional debris, which included scores of multi-kilometer-wide fragments, evolved onto Earth-crossing orbits and was responsible for the spike in the cratering rate.

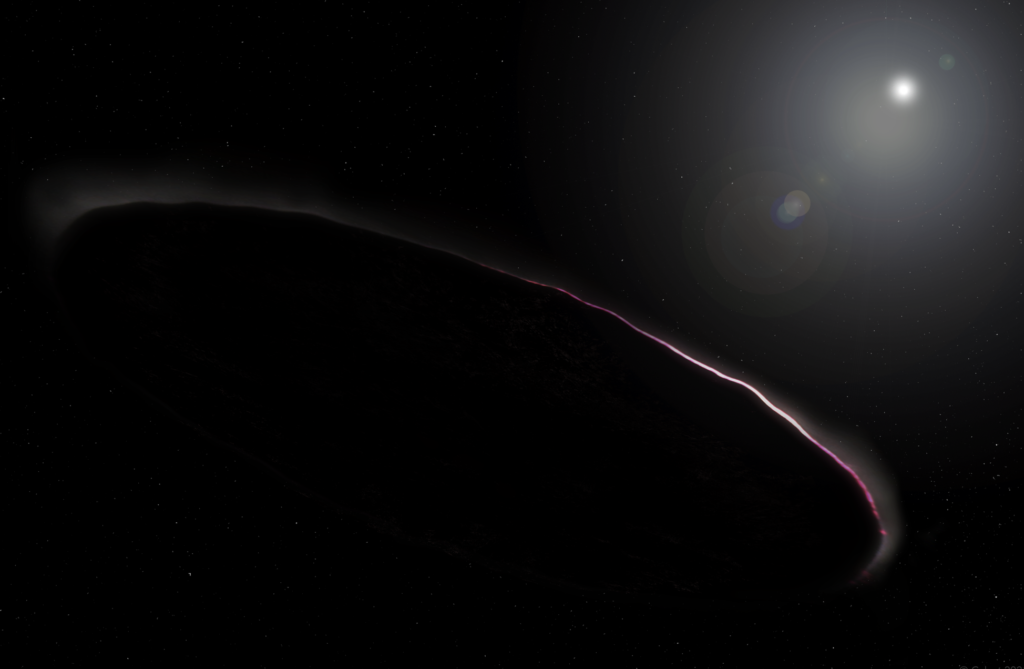

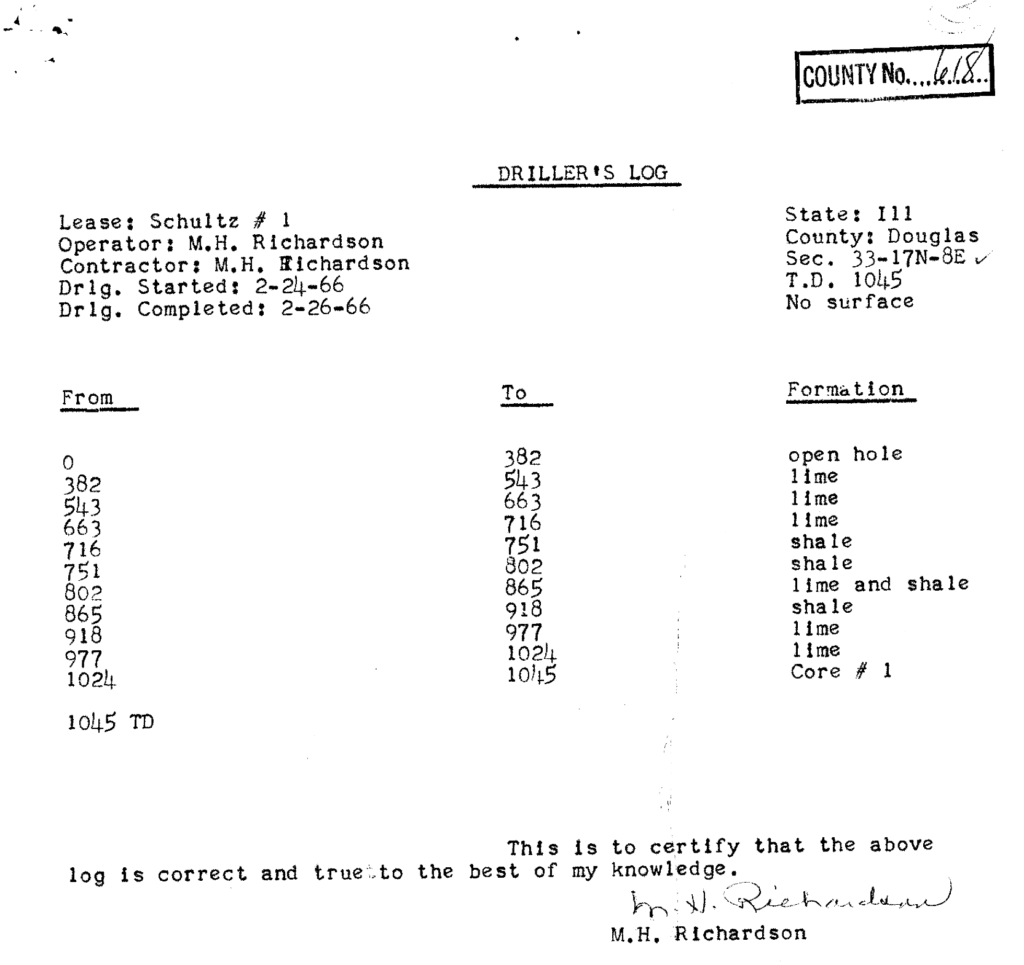

A paper that presents a twist on this explanation has received some media action recently, including a write-up in the New York Times. The idea is that a large bolus of the L-chondrite debris, perhaps in the form of a body like the Martian moon Phobos, was tidally captured by the Earth, and then dynamically evolved to form a ring. Over time, the material in the erstwhile ring, which would have included multi-km objects that were effectively small temporary moons, is imagined to have inclination-damped to equatorial orbits while simultaneously experiencing atmospheric drag and tidal decay, which eventually brought it all down to the surface. This is interesting because, if true, the Ordovician impacts would all have been near (if not right on) the equator, which in turn provides an important anchor point for Earth’s paleo-digital elevation models (see this post). But is the ring hypothesis plausible? The Glasford crater looks to every indication like a normal impact scar. Could the structure plausibly be the outcome of an asteroid which came barreling in along the ancient equator from a perilous low-Earth orbit?

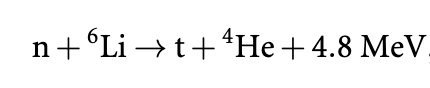

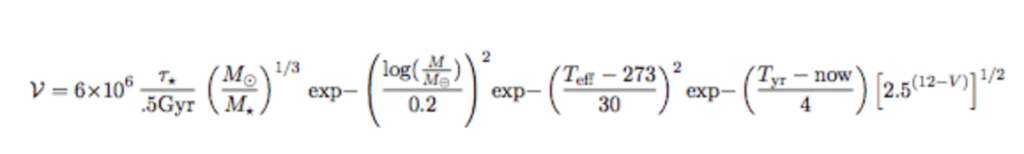

In thinking about this, I’ve arrived at the conclusion that a de-orbiting asteroid produces quite a spectacle. As a naive first approximation, let’s assume that the asteroid is a sphere, and is made of that “super tough” material that’s been a recent focus of discussion over at Harvard.

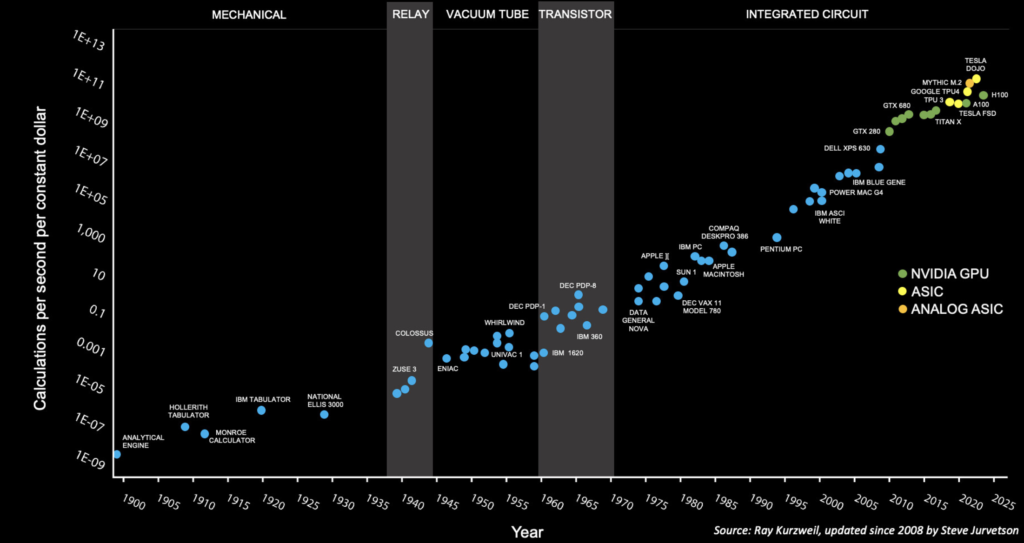

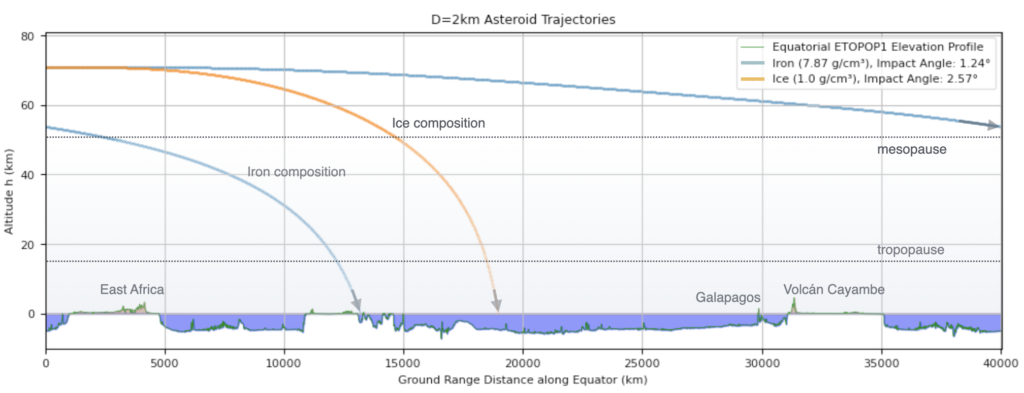

Super-tough asteroids are just that. Tough. They don’t pancake or break up in response to the transverse pressure gradients imparted by atmospheric drag. If we adopt the isothermal approximation to Earth’s atmosphere, and an iron super-tough composition, we can calculate that when the asteroid has lowered its circular orbit to 71 km altitude (in the middle of the mesosphere), the acceleration from atmospheric drag is one ten-thousandth of the gravitational acceleration. We can then integrate the trajectory, assuming that gravity and drag are the only forces acting. The integration indicates that a 2-km iron asteroid makes roughly 1 1/3 full trips around Earth before reaching sea level with a velocity of 7.43 km/sec (close to its original orbital velocity). When the asteroid reaches the surface in the super-tough approximation, it’s effectively in an e=0.01 orbit, and it impacts with an angle of slightly more than 1.24 degrees. Here’s the trajectory over the current-day elevation profile for the equator. Ironically, the impact point here is very close to the site of a recent expedition. (No further comment on that).
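For anyone who wants to poke at this, here is a minimal sketch of that kind of calculation: an exponential (isothermal) atmosphere, a rigid iron sphere, and a fixed-step RK4 integration in the orbital plane. The scale height (8.5 km), drag coefficient (1.0), non-rotating atmosphere, and spherical Earth are all assumptions I've picked for illustration, so the numbers it prints will not match the ones quoted above exactly. All the function names are hypothetical.

```python
import math

GM = 3.986004e14      # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.378e6     # equatorial radius, m (spherical Earth assumed)
RHO0 = 1.225          # sea-level air density, kg/m^3
H_SCALE = 8.5e3       # ASSUMED isothermal scale height, m
CD = 1.0              # ASSUMED drag coefficient for a sphere
RHO_IRON = 7870.0     # density of iron, kg/m^3
RADIUS = 1.0e3        # radius of a 2-km-diameter asteroid, m

# Area-to-mass ratio of a solid sphere: (pi R^2) / (4/3 pi R^3 rho)
AREA_OVER_MASS = 3.0 / (4.0 * RADIUS * RHO_IRON)


def air_density(h):
    """Isothermal atmosphere: density falls off exponentially with altitude."""
    return RHO0 * math.exp(-h / H_SCALE)


def drag_to_gravity_ratio(h):
    """Drag acceleration over gravitational acceleration on a circular orbit."""
    r = R_EARTH + h
    v2 = GM / r  # circular orbital speed squared
    a_drag = 0.5 * CD * air_density(h) * AREA_OVER_MASS * v2
    return a_drag / (GM / r**2)


def deriv(state):
    """Time derivative of (x, y, vx, vy) under gravity plus quadratic drag."""
    x, y, vx, vy = state
    r = math.hypot(x, y)
    v = math.hypot(vx, vy)
    k = 0.5 * CD * air_density(r - R_EARTH) * AREA_OVER_MASS * v
    g = GM / r**3
    return (vx, vy, -g * x - k * vx, -g * y - k * vy)


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(s, k, f):
        return tuple(si + f * ki for si, ki in zip(s, k))
    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def deorbit(h0=71e3, dt=1.0, t_max=1e5):
    """Integrate from a circular orbit at altitude h0 down to sea level."""
    r0 = R_EARTH + h0
    state = (r0, 0.0, 0.0, math.sqrt(GM / r0))
    swept, t = 0.0, 0.0
    while math.hypot(state[0], state[1]) > R_EARTH and t < t_max:
        new = rk4_step(state, dt)
        # Accumulate the angle swept about Earth's center during this step.
        swept += math.atan2(state[0] * new[1] - state[1] * new[0],
                            state[0] * new[0] + state[1] * new[1])
        state, t = new, t + dt
    x, y, vx, vy = state
    r, v = math.hypot(x, y), math.hypot(vx, vy)
    # Angle of the velocity below the local horizontal at touchdown.
    angle = math.degrees(math.asin(-(x * vx + y * vy) / (r * v)))
    return {"revolutions": swept / (2 * math.pi), "speed_km_s": v / 1e3,
            "angle_deg": angle, "time_s": t}


print(drag_to_gravity_ratio(71e3))
print(deorbit())
```

The outcome is sensitive to the assumed scale height and drag coefficient, because the density (and hence the decay rate) depends exponentially on altitude. Treat it as a toy for exploring the qualitative behavior rather than a reproduction of the figures in the text.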

I never saw the 1979-vintage disaster movie, Meteor, but I did read the comic book, which left quite an impression, especially this two-page spread, where a ~50-meter wide fragment of an asteroid rampages through Midtown at an extremely oblique angle.

The impactor’s behavior in the comic book, in particular its remarkable lack of a hypersonic shock wave, brings to mind the earnest freshman honors-level calculations for UFOs published by Knuth, Powell & Reali (2019), e.g.

“… The UAP was estimated to be approximately the same size as an F/A-18 Super Hornet, which has a weight of about 32000 lbs, corresponding to 14550 kg. Since we want a minimal power estimate, we took the acceleration as 5370 g and assumed that the UAP had a mass of 1000 kg. The UAP would have then reached a maximum speed of about 46000 mph during the descent, or 60 times the speed of sound…”

What really happens when a mountain-sized asteroid comes skimming in horizontally at orbital velocity? It doesn’t sound like a fun experience. I’ve looked through the literature, and have not been able to source modern simulations of impacts that have obliquities of order one degree, where topography matters. This seems like a good computational project for the iSALE-3D code. Stay tuned…